Every year, the artificial intelligence company OpenAI improves its text-writing bot, GPT. And every year, the internet responds with shrieks of woe about the impending end of human-penned prose. This cycle repeated last week when OpenAI launched ChatGPT—a version of GPT that can seemingly spit out any text, from a Mozart-styled piano piece to the history of London in the style of Dr. Seuss. The response on Twitter was unanimous: The college essay is doomed. Why slave over a paper when ChatGPT can write an original for you?

Chatting with ChatGPT is fun. (Go play with it!) But the college essay isn’t doomed, and A.I. like ChatGPT won’t replace flesh-and-blood writers. It may make writing easier, though.

GPT-3, released by OpenAI in 2020, is the third and best-known version of OpenAI’s Generative Pre-trained Transformer—a computer program known as a large language model. Large language models produce language in response to language—typically, text-based prompts (“Write me a sonnet about love”). Unlike traditional computer programs that execute a series of hard-coded commands, language models are trained by sifting through large datasets of text like Wikipedia. Through this training, they learn patterns in language that are then used to generate the most likely completions to questions or commands.

Language is rife with repetition. Our ability to recognize and remember regularities in speech and text allows us to do things like complete a friend’s sentence or solve a Wordle in three tries. If I asked you to finish the sentence “The ball rolled down the …,” you’d say “hill,” and so would GPT-3. Large language models are, like people, great at learning regularities in language, and they use this trick to generate human-like text. But when tested on whether they understand the language they produce, they often look more like parrots than poets.
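GPT-3 is a large neural network (a transformer) that weighs long stretches of preceding text, so it is far more sophisticated than anything that fits here. Still, the basic move described above can be sketched with a toy word counter in Python: predict a likely next word from patterns in training text. The four-sentence corpus and the functions `train_bigrams` and `next_word` are hypothetical, invented for illustration. This is a simple bigram model, not how GPT-3 is actually built.

```python
from collections import Counter, defaultdict

# A made-up miniature "training set" (hypothetical, for illustration only).
corpus = [
    "the ball rolled down the hill",
    "the cart rolled down the hill",
    "the ball rolled down the street",
    "the water rolled down the hill",
]

def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word(counts, prompt):
    """Return the word seen most often after the prompt's last word."""
    last = prompt.lower().split()[-1]
    if last not in counts:
        return None  # this word never appeared in training, so no prediction
    return counts[last].most_common(1)[0][0]

model = train_bigrams(corpus)
print(next_word(model, "The ball rolled down the"))  # prints: hill
```

The toy model answers “hill” because “hill” followed “the” more often than any other word in its tiny training text. It has no knowledge of balls or slopes. It also looks only at the last word. If the ball had in fact rolled down the street, it would still say “hill”: the most probable continuation is not necessarily the true one.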

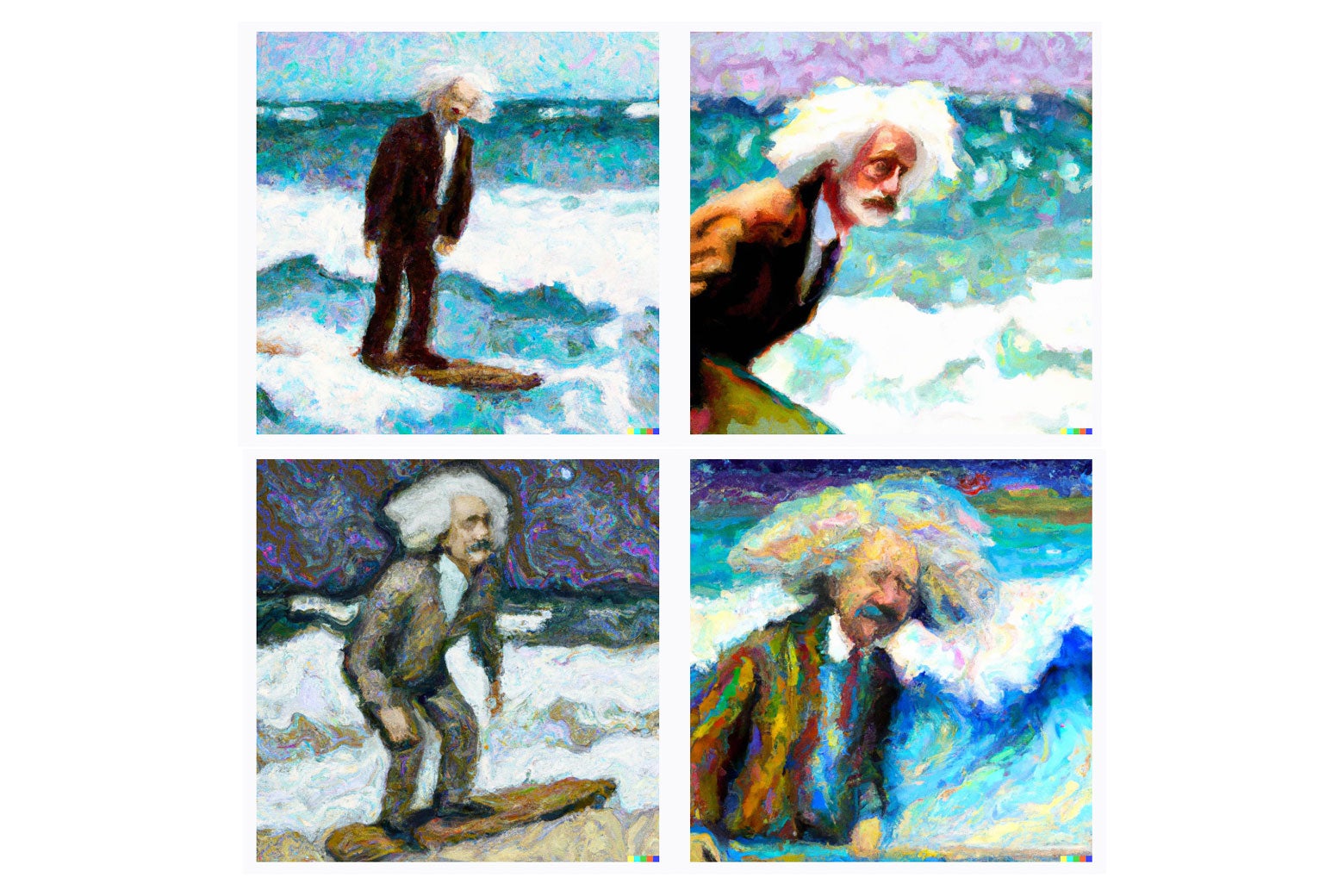

Gary Marcus, an emeritus professor at NYU and the author of Rebooting AI, is a vocal critic of the idea that bots like GPT-3 understand what they’re writing. In a recent preprint (an academic paper that hasn’t been peer-reviewed), Marcus and two co-authors used OpenAI’s DALL-E 2 to demonstrate the limitations of large language models. DALL-E 2, like GPT-3, responds to text-based prompts. But instead of producing language, it creates images. DALL-E 2 was trained to learn associations between images and their captions so that it can produce entirely new images from the text users enter. If you ask it for, say, “an impressionist painting of Albert Einstein surfing,” you get the four images below.

Not bad, right? DALL-E 2 was recently used to win an art competition.

But as Marcus and his co-authors point out in their paper, DALL-E 2 doesn’t understand the relationships between the words that users enter. If, for instance, you ask it to produce an image of “an old man talking to his parents,” you get four images of three people talking. The A.I. fails to understand that, however old the old man looks, the people he’s talking to must, as his parents, look older.

Compared with DALL-E 2, ChatGPT appears to have a better grasp of the text users enter and the responses it spits out. This might be why the internet responded to its release so enthusiastically. ChatGPT “is causing jaws to drop and brows to furrow,” wrote Axios.

I had trouble stumping it with some classic riddles from cognitive psychology. Take the lily pad problem:

Prompt: A lily pad, in a pond, doubles in size every day. On the 20th day, it covers the entire pond. On what day did it cover half the pond?

ChatGPT: A lily pad in a pond that doubles in size every day will cover half the pond on the 19th day, one day before it covers the entire pond.
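The reasoning behind that answer fits in one line. Write A(d) for the fraction of the pond covered on day d (notation introduced here only for illustration). Doubling means each day’s coverage is twice the previous day’s, so stepping back one day from full coverage gives exactly half:

$$
A(d+1) = 2\,A(d), \qquad A(20) = 1 \;\Rightarrow\; A(19) = \tfrac{1}{2}\,A(20) = \tfrac{1}{2}
$$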

The A.I. grasped that the question described exponential growth, a phenomenon that, as the pandemic demonstrated, many humans fail to understand. But I did fool ChatGPT with the bat-and-ball problem made famous by Princeton psychologist Daniel Kahneman’s book Thinking, Fast and Slow:

Prompt: A bat and ball cost $1.10. The bat costs $1 more than the ball. How much does the ball cost?

ChatGPT: If the bat costs $1 more than the ball, and the bat and ball cost $1.10 in total, then the ball must cost $1.10 - $1.00 = $0.10.

The correct answer is 5 cents. (Trust me.)
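For readers who would rather not take that on trust, the algebra is short. Call the ball’s price b dollars (a label introduced here for illustration). The bat then costs b + 1.00, and together they cost 1.10:

$$
b + (b + 1.00) = 1.10 \;\Rightarrow\; 2b = 0.10 \;\Rightarrow\; b = 0.05
$$

ChatGPT’s answer fails this check. A 10-cent ball would mean a $1.10 bat, for a total of $1.20.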

ChatGPT might fail at tests of reasoning, but it’s great at generating human-like text of different lengths and in various styles. This brings us back to the college essay, which many commentators on Twitter claimed would be an easy task for ChatGPT. “We’re witnessing the death of the college essay in realtime,” tweeted one Google employee. Ethan Mollick, a management professor at the University of Pennsylvania, had ChatGPT write an essay question, create a grading rubric for that question, answer the question, and grade its own answer. (It got an A minus.) How could the essay not be doomed?

This isn’t the first time that large language models have been predicted to fell the essay, or worse. “To spend ten minutes with Sudowrite [a GPT-3-based A.I.] is to recognize that the undergraduate essay, the basic pedagogical mode of all humanities, will soon be under severe pressure,” wrote journalist Stephen Marche in a 2021 New Yorker piece. (On Tuesday, Marche wrote an article for the Atlantic titled “The College Essay Is Dead.”) And in 2019, when GPT-2 was created, OpenAI initially withheld the full model from the public because the “fear of malicious applications” was too high.

If any group were to put an A.I. to malicious use, essay-burdened undergraduates would surely be the first. But evidence that A.I. is being used to complete university assignments is hard to find. (When I recently asked my class of 47 students about using A.I. for schoolwork, they looked at me like I was mad.) It could be only a matter of time and access before students use A.I. more widely to cheat; ChatGPT is the first free text-writing bot from OpenAI (although it won’t be free forever). But it could also be that large language models are just not very good at answering the types of questions professors ask.

If you ask ChatGPT to write an essay contrasting socialism and capitalism, it produces what you would expect: 28 grammatical sentences covering wealth distribution, poverty reduction, and employment stability under these two economic systems. But few professors ask students to write papers on broad questions like this. Broad questions lead to a rainbow of responses that are impossible to grade objectively. And the more a question resembles one a student would actually get (narrow and focused on specific, course-related content), the worse ChatGPT performs.

I gave ChatGPT a question about the relationship between language and colour perception, one I ask my third-year psychology of language class, and it bombed. Not only did its response lack detail, but it also confused a paper I asked it to describe with an entirely different study. Several more questions produced the same vague and error-riddled results. If one of my students handed in the text ChatGPT generated, they’d get an F.

Large language models generate the most likely responses based on the text they are fed during training, and, for now, that text doesn’t include the reading lists of thousands of college classes. They also make things up. The completion a model calculates as most probable is not always the correct response, or even a true one. When I asked Gary Marcus about the prospect of ChatGPT writing college essays, his answer was blunt: “It’s basically a bullshit artist. And bullshitters rarely get As—they get Cs or worse.”

Until these problems are fixed (and, given how these models work, it’s unclear that they can be), I doubt A.I. like ChatGPT will produce good papers. Even humans who write papers for money struggle to do it well. In 2014, a department of the U.K. government published a study of history and English papers that online essay-writing services produced for senior high school students. Most of the papers received a grade of C or lower. Much like the work of ChatGPT, the papers were vague and error-filled. It’s hard to write a good essay when you lack detailed, course-specific knowledge of the content that led to the essay question.

ChatGPT may fail at writing a passable paper, but it could be a useful pedagogical tool that helps students write papers themselves. Ben Thompson, who runs the technology blog and newsletter Stratechery, wrote about this possibility in a post about ChatGPT and history homework. Thompson asked ChatGPT to complete his daughter’s assignment on the English philosopher Thomas Hobbes; the A.I. produced three error-riddled paragraphs. But, as Thompson points out, failures like this don’t mean that we should trash the tech. In the future, A.I. like ChatGPT could be used in the classroom to generate text that students then fact-check and edit. That is, these bots could solve the problem of the blank page by providing a starting point for papers. I couldn’t agree more.

I frequently used ChatGPT while working on this piece. I asked for definitions that, after a fact-check, I included. At times, I threw entire paragraphs from this piece into ChatGPT to see if it produced prettier prose. Sometimes it did, and then I used that text. Why not? Like spell check, a thesaurus, and Wikipedia, ChatGPT made the task of writing a little easier. I hope my students use it.

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.